Whenever you release a site for which being indexed in Google matters at all: check your robots.txt.

Last month a new website that I had worked on for two years was released. Somehow nobody checked robots.txt after the release. I was curious how the new site was doing in Google, but when I checked the indexed pages (using the super useful site: operator) I noticed some weird things: pages in Russian were showing on top, even though Russian was supposed to disappear from the site. So the next thing to check was robots.txt. It looked a bit like this:

    User-agent: *
    Disallow: /

That was exactly the robots.txt used on the development site. Blocking all crawlers is a good idea on a dev site, especially for long-term projects: you never know how Googlebot might find it, and once it has been found you'll have to change its URL, unless you don't care that random people (including folks with bad intentions, such as spammers) are looking over your shoulder. On the live site, though, the same file tells every crawler to stay away from every page.
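A check like this is easy to automate after a release. Below is a minimal sketch using Python's standard `urllib.robotparser`; the function name `blocks_everything` and the two sample files are made up for illustration, and the robots.txt content is passed in as a string so the example runs without any network access.

```python
from urllib.robotparser import RobotFileParser


def blocks_everything(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if this robots.txt forbids user_agent from fetching the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# The development robots.txt from above, and a hypothetical live one
# that only hides an (assumed) admin area.
DEV_ROBOTS = "User-agent: *\nDisallow: /\n"
LIVE_ROBOTS = "User-agent: *\nDisallow: /admin/\n"

if __name__ == "__main__":
    for name, content in (("dev", DEV_ROBOTS), ("live", LIVE_ROBOTS)):
        status = "BLOCKED" if blocks_everything(content) else "crawlable"
        print(f"{name}: {status}")
```

In a real release checklist you would fetch the live robots.txt first and feed its text to the function; that part is left out here on purpose.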

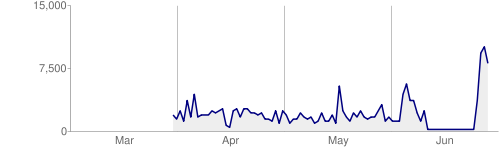

This is how it the crawl rate graph looked in Google Webmasters after fixing this:

Also, if you're on Drupal 6, it's good to comment out the /sites/ line in robots.txt, because left active it stops Google from indexing any of your images and other files that are stored in /sites/:

    # Disallow: /sites/
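To see what that one line changes, here is a small sketch, again with Python's standard `urllib.robotparser`. The helper `is_crawlable`, the shortened robots.txt samples and the image path `/sites/default/files/logo.png` are assumptions for illustration, not taken from a real site.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if user_agent may fetch path under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


# Shortened, hypothetical excerpts of a Drupal-style robots.txt.
SITES_BLOCKED = "User-agent: *\nDisallow: /includes/\nDisallow: /sites/\n"
SITES_ALLOWED = "User-agent: *\nDisallow: /includes/\n# Disallow: /sites/\n"

# Hypothetical path of an uploaded image.
IMAGE_PATH = "/sites/default/files/logo.png"

if __name__ == "__main__":
    print("rule active:     ", is_crawlable(SITES_BLOCKED, IMAGE_PATH))
    print("rule commented:  ", is_crawlable(SITES_ALLOWED, IMAGE_PATH))
```

With the rule active the image path is off limits; once the line starts with `#` the parser ignores it and the image can be fetched.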